Has Your Learning Program Hit Its Objectives?

Learning Program Evaluation

Learning strategies are developed with a key objective in mind. But how will we know if the strategy has been effective? Through learning program evaluation, of course!

Learning Program Evaluation: Where To Begin

At the beginning of every L&D project, you should conduct a Learning Needs Analysis (LNA), also known as a Training Needs Analysis (TNA), to nut out the program objectives and learning needs. Use these findings to develop the learning outcomes that will shape the learning solution/s.

Once you’ve established the learning outcomes, consider what evaluation strategy is appropriate for the program. Inputs to this decision include factors such as program cost, strategic/operational criticality, audience size/level and compliance/regulatory requirements.

The purpose of an evaluation strategy is to assess the effectiveness of the learning program and materials. It should reflect the learning outcomes to measure whether they have been achieved. There are a number of elements to consider when designing evaluation strategies. Let’s take a closer look at these…

Formative and Summative Evaluation

Firstly, consider how you will use both formative and summative evaluation methods.

Formative evaluation is used to identify any barriers or unexpected opportunities that may emerge throughout program design and development. The feedback gathered during formative evaluation is used to fine-tune the design, development and implementation of the program, gather participant reactions and identify what is not working.

Summative evaluation is the process of collecting data following program implementation, in order to determine its effectiveness. It may measure knowledge transfer, performance outcomes, cost factors, and changes in learner behaviour, skills and knowledge.

Levels of Evaluation

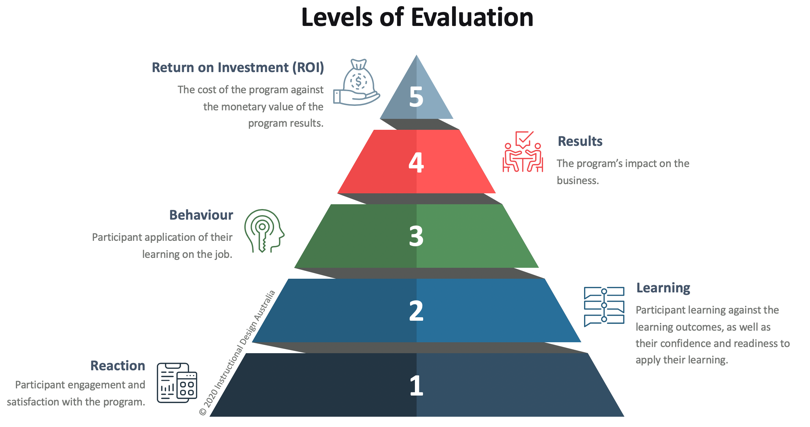

Think about the levels of evaluation that are appropriate to your learning strategy. Typically, five levels of evaluation are considered (with the first three levels the most commonly used).

Adapted from “Evaluating Training Programs” by D. Kirkpatrick, 1994, San Francisco, CA: Berrett-Koehler Publishers, Inc. [1] and “The Value of Learning: How Organisations Capture Value and ROI” by P. Phillips & J. Phillips, 2007, Pfeiffer. [2]

Donald Kirkpatrick’s model of evaluation consists of four levels:

- Reaction

- Learning

- Behaviour

- Results [1]

Reaction measures participant engagement and satisfaction with the program.

Learning measures participant learning against the learning outcomes, as well as their confidence and readiness to apply their learning.

Behaviour measures participant application of their learning on the job.

Results measures the program’s impact on the business.

Jack and Patti Phillips developed a fifth level of evaluation:

- Return On Investment (ROI) [2]

Return On Investment measures the cost of the program against the monetary value of the program results.
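
As a rough illustration, ROI is commonly expressed as net program benefits (the monetary value of the results minus the program cost) divided by the program cost, shown as a percentage. The sketch below assumes that formulation. The function name and the dollar figures are hypothetical and are not drawn from a real program.

```python
def roi_percent(program_benefits: float, program_costs: float) -> float:
    """Return ROI as a percentage: net benefits divided by costs, times 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be greater than zero")
    net_benefits = program_benefits - program_costs
    return net_benefits / program_costs * 100


# Hypothetical figures for illustration only.
example_roi = roi_percent(program_benefits=150_000, program_costs=100_000)
print(f"ROI: {example_roi:.0f}%")
```

The arithmetic is the easy part. The harder work is arriving at a defensible monetary value for the program results in the first place.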

Evaluating program effectiveness at levels 4 and 5 is an involved process, as it requires isolation of learning impacts on job performance from any other potential variables. It can be a costly process.

For this reason, evaluation at levels 1, 2 and 3 is conducted more frequently, with key findings communicated to relevant stakeholders.

Data Collection

Once you’ve identified the evaluation levels, consider which approaches you will use to collect both qualitative and quantitative data.

Quantitative (objective) data is represented in numeric values (quantity). Methods of quantitative data collection include:

- Questionnaires

- Surveys

- Assessment results

Example question: ‘Please rate your level of agreement for the following statements…’
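
Responses to a rating question like this can be summarised numerically. The sketch below is one possible approach, assuming a five-point agreement scale. The scale labels, the function name and the sample responses are all hypothetical.

```python
from statistics import mean

# Hypothetical five-point agreement scale (an assumption, not from a real survey).
SCALE = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def summarise_ratings(responses: list[str]) -> dict[str, float]:
    """Convert agreement labels to scores and return the mean and % agreement."""
    if not responses:
        raise ValueError("responses must not be empty")
    scores = [SCALE[label] for label in responses]
    # Count "Agree" and "Strongly agree" as agreement.
    agree_count = sum(1 for score in scores if score >= 4)
    return {
        "mean_score": mean(scores),
        "percent_agree": agree_count * 100 / len(scores),
    }


# Made-up responses to a single statement.
sample = ["Agree", "Strongly agree", "Neutral", "Agree", "Disagree"]
summary = summarise_ratings(sample)
print(summary)
```

Reporting the percentage in agreement alongside the mean can help, because a mean on its own may hide a split between strongly positive and strongly negative responses.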

Qualitative (subjective) data is descriptive rather than numeric, so it cannot be measured in the same way, but it provides valid and valuable insights into program effectiveness. Methods of qualitative data collection include:

- Focus groups

- Participant observations

- Open-question surveys

Example question: ‘What has changed about your work (actions, tasks, activities) as a result of this training/workshop?’

A mixture of quantitative and qualitative data should be collected to provide an accurate overview of learning program effectiveness. [3]

Whilst quantitative data may provide information on participant development of knowledge, skills and behaviours from the learning program, qualitative data provides insights as to why the trends exist. For example, findings from quantitative data may illustrate that, whilst participants felt competent and confident in transferring their learning to the workplace, they found it difficult to apply in practice. Qualitative data may, in this case, reveal that participants did not receive the opportunity to apply new skills and knowledge in the first 30 days post-learning.

Timing of Data Collection

Once the ‘how’ of data collection is determined, the next decision is around ‘when’.

Consider collecting data at several stages during and after the program. This will increase the accuracy of the findings and provide an overall picture of the effectiveness of the program.

Pre

Pre-workshop data collection provides qualitative information on participants’ current levels of competence and confidence. This serves as a benchmark against which to evaluate participant progress.

Post

Data collection immediately following the program highlights new and emerging capability changes, intended application and anticipated barriers or enablers to application.

Post 30+ Days

Gathering data 30+ days after the program identifies actual knowledge, skill and behavioural shift and/or unanticipated barriers or enablers.
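
Where the same rating question is asked at each of these three points, the shift from the pre-program benchmark can be compared across stages. The sketch below is a minimal illustration, assuming self-rated confidence scores on a 1 to 5 scale. The stage names, function name and scores are hypothetical.

```python
from statistics import mean

# Hypothetical self-rated confidence scores (1-5) for the same participants
# at three collection points. All values are made up for illustration.
scores_by_stage = {
    "pre": [2, 3, 2, 3],
    "post": [4, 4, 3, 5],
    "post_30_days": [3, 4, 3, 4],
}


def shift_from_baseline(
    scores: dict[str, list[int]], baseline: str = "pre"
) -> dict[str, float]:
    """Return each later stage's mean score minus the baseline mean."""
    baseline_mean = mean(scores[baseline])
    return {
        stage: mean(values) - baseline_mean
        for stage, values in scores.items()
        if stage != baseline
    }


shifts = shift_from_baseline(scores_by_stage)
for stage, shift in shifts.items():
    print(f"{stage}: {shift:+.2f} vs pre-program baseline")
```

A smaller shift at 30+ days than immediately after the program is the kind of trend that qualitative follow-up can help to explain.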

Benefits of Learning Program Evaluation

Evaluation determines the effectiveness of a program, including the implementation of participant learning and any impacts on the business. It identifies what works and what needs to change.

Evaluation can be a key to effective learning solutions.

References

[1] Kirkpatrick, D. (1994). Evaluating Training Programs. San Francisco, CA: Berrett-Koehler Publishers, Inc.

[2] Phillips, P. and Phillips, J. (2007). The Value of Learning: How Organisations Capture Value and ROI. Pfeiffer.

[3] ATSDR (2015). Principles of Community Engagement: Chapter 7: Program Evaluation. Retrieved from https://www.atsdr.cdc.gov/communityengagement/pce_program_methods.html